Keras Save Cannot Load Again Load a Weight File Containing

Part I: Saving and Loading of Keras Sequential and Functional Models

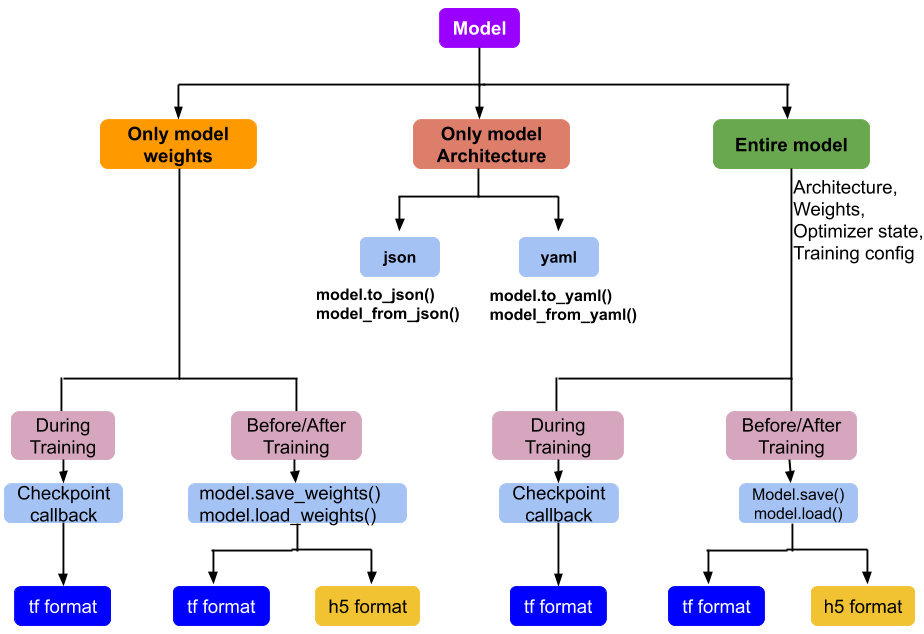

Saving the entire model, just the architecture, or only the weights

In TensorFlow and Keras, there are several ways to save and load a deep learning model. With so many options, it can be confusing to know which one to select for saving a model. Moreover, if you have a custom metric or a custom layer, the complexity increases even more.

The main goal of this article is to explain the different approaches for saving and loading a Keras model. If you are a seasoned machine learning practitioner, you most probably know which option to select. You could skim through this article to see if there is any value you are going to get by reading it.

The outline of this article is as follows:

- Overview of saving and loading of Keras models

- Saving and loading only architecture

- Saving and loading only weights

- Saving and loading entire models

1. Overview of Saving and Loading of Models

Before going into details, let us see what a Keras model consists of (the following is from the TensorFlow website):

An architecture, or configuration, which specifies what layers the model contains, and how they're connected

A set of weights values (the "state of the model")

An optimizer state (defined by compiling the model)

A set of losses and metrics (defined by compiling the model)

Depending on your requirements, you may want to save only the architecture of the model and share it with someone (a team member or client). In another case, you may want to save only the weights and resume training later from where you left off. Finally, you may want to save the entire model (architecture, weights, optimizer state, and training configuration) and share it with others. The good thing is that Keras allows us to do whatever we wish.

The above flowchart explains the different options for saving and loading (i) the model architecture only, (ii) the model weights only, and (iii) the entire model (architecture, weights, optimizer state, and training configuration).

The scope of this article is to describe options (i) and (ii) in more detail, and to describe option (iii) with a simple example. As saving a complex Keras model with custom metrics and custom layers is not simple, we will deal with it in another article (part II of this series).

The model architecture can be saved in either json or yaml format, whereas the model weights or the entire model can be saved in tf format or h5 format. In the following sections, more details on these options are provided with code examples.

2. Saving and loading only architecture

The model architecture includes the Keras layers used to create the model and how the layers are connected to each other. After defining the model architecture, you can plot it using the plot_model function under tensorflow.keras.utils. Executing the command plot_model(model, show_shapes=True) plots the architecture, where you can see the names of the layers, how the layers are connected to each other, and the input and output shapes of the data.

If your use case requires saving only the model architecture, then you could save it either in json format or in yaml format as shown below. The first command (model.to_json()) in the flowchart saves the architecture in json format and the second command (model_from_json()) loads the architecture back into a newly instantiated model. A more detailed example code is provided below. As the approach is the same for json and yaml, the following example is described for json format only.
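A minimal sketch of the architecture-only round trip described above. The small model is a hypothetical stand-in for the classifier used in this article, and only the json path is shown because recent TensorFlow releases no longer support to_yaml.

```python
import numpy as np
import tensorflow as tf

# Hypothetical small classifier for 28x28 inputs (stand-in for the article's model).
model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])

# Serialize only the architecture to a JSON string (no weights, no optimizer state).
json_config = model.to_json()

# Rebuild a new model from the JSON string; its weights are freshly initialized.
new_model = tf.keras.models.model_from_json(json_config)

# The layer types match, but the first Dense kernel does not.
same_layer_types = [type(l).__name__ for l in model.layers] == [
    type(l).__name__ for l in new_model.layers
]
same_weights = np.allclose(model.get_weights()[0], new_model.get_weights()[0])
print("same layer types:", same_layer_types)
print("same weights:", same_weights)
```

Because only the configuration travels through the JSON string, the rebuilt model has the same structure but still needs weights from somewhere else before it is useful.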

In this section, we will take a simple classification model, train it, save the architecture and weights, create a new model, load the saved architecture, load the saved weights, and finally compare the performance of the model before saving versus after loading the model architecture.

The following example uses a simple Keras Sequential model with MNIST data to classify a given image of a digit between 0 and 9. After training the model, the performance of the model was evaluated with (x_test, y_test) data.

The architecture and weights of the model were saved to disk as follows. In the following example, the architecture was saved in json format, but the code is very similar to saving the architecture in yaml format. Note that weights can be saved in two formats (tf and h5) as shown below.

As we have the model configuration, we can create a new model (loaded_model_h5) with freshly initialized weights, which means that they are different from those of the original model. Before evaluating the performance of the model, we need to load the weights that were saved earlier. Once you run the following code, you can notice that the performance is identical before saving and after loading the architecture.

Similarly, the performance of loaded_model_tf is identical to that of the original model. The complete code used in this section is shared here.
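The steps in this section can be sketched roughly as follows. This is not the original code of the article: random arrays stand in for MNIST so that nothing has to be downloaded, the file names are hypothetical, and the weights file ends in .weights.h5 on the assumption that this name is accepted by both older tf.keras and Keras 3 (so only the h5 variant is shown).

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Random data standing in for MNIST (assumption: avoids a dataset download).
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)
x_test = rng.random((64, 28, 28)).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
model.fit(x_train, y_train, epochs=1, verbose=0)

# Hypothetical output locations inside a temporary directory.
out_dir = tempfile.mkdtemp()
json_path = os.path.join(out_dir, "model_architecture.json")
weights_path = os.path.join(out_dir, "model.weights.h5")

# Save the architecture as JSON and the weights as HDF5.
with open(json_path, "w") as f:
    f.write(model.to_json())
model.save_weights(weights_path)

# Recreate the model from the JSON file, then load the saved weights into it.
with open(json_path) as f:
    loaded_model_h5 = tf.keras.models.model_from_json(f.read())
loaded_model_h5.load_weights(weights_path)

# With the weights restored, both models give the same predictions.
preds_match = np.allclose(model.predict(x_test, verbose=0),
                          loaded_model_h5.predict(x_test, verbose=0),
                          atol=1e-6)
print("predictions match:", preds_match)
```

The model rebuilt from JSON is not compiled, so call compile on it before using evaluate or fit.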

3. Saving and loading only weights

As mentioned earlier, model weights can be saved in two different formats, tf and h5. Moreover, weights can be saved either during model training or before/after training.

When do you need to save only weights?

If you are training a model, you don't need to save the entire model each and every epoch because there is no change in the architecture of the model. You only need to save the weights and biases to capture the learnable parameters of the model. Keras provides a couple of options to save the weights and biases either during the training of the model or before/after the model training.

3.1 Saving weights before training

When you instantiate a model API (Sequential/Functional) and provide an architecture (stack of Keras layers), the architecture of the model is created and the weights and biases are randomly initialized. At this point, you could compile (model.compile) the model and save the weights and biases. This is what I am calling saving only weights before training.

Note that so far we haven't trained (model.fit) the model. Running model.predict with randomly initialized weights and biases is not useful as they were not updated through training.

When you instantiate a new_model as shown below, its weights are randomly initialized and are different from what we had saved earlier using model.save_weights. If we don't load the saved weights into the new_model, then its predictions do not match those of the original model because the weights are very different.

The following example code demonstrates the difference in the predictions when you don't load the saved weights. Finally, when we load the saved weights using new_model.load_weights(), the predictions are identical to those of the original model.
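A rough sketch of that comparison, assuming a hypothetical build_model helper, random input data in place of MNIST, and a .weights.h5 file name chosen so that the call works on both older tf.keras and Keras 3:

```python
import os
import tempfile

import numpy as np
import tensorflow as tf


def build_model():
    # Hypothetical helper: every call returns the same architecture
    # with a new random initialization.
    return tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])


x = np.random.default_rng(0).random((16, 28, 28)).astype("float32")

# Compile but do not train, then save the randomly initialized weights.
model = build_model()
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy")
weights_path = os.path.join(tempfile.mkdtemp(), "untrained.weights.h5")
model.save_weights(weights_path)

# A second instance starts from a different random initialization.
new_model = build_model()
match_before_load = np.allclose(model.predict(x, verbose=0),
                                new_model.predict(x, verbose=0), atol=1e-6)

# After loading the saved weights, the two models agree.
new_model.load_weights(weights_path)
match_after_load = np.allclose(model.predict(x, verbose=0),
                               new_model.predict(x, verbose=0), atol=1e-6)

print("match before load_weights:", match_before_load)
print("match after load_weights:", match_after_load)
```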

3.2 Saving weights after training

This is the approach most commonly used. After instantiating the model, it can be compiled and trained. As mentioned in section 3.1, when you instantiate a model, weights are initialized randomly. During training, these weights get updated to optimize the performance of the model. Once you are happy with the model performance, you can save the weights using model.save_weights. You can estimate the performance of the model by executing model.evaluate with the test data as shown below.

When you want to resume the training, you can instantiate the model architecture, compile the model, and load the weights using new_model.load_weights. You can check the performance of the model before saving and after loading weights into the new_model. The performance is identical, as noticed in the following example. The full code used in this section is shared here.
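The save, reload, and resume cycle can be sketched as below. The build_model helper, the random stand-in data, and the .weights.h5 file name are assumptions made for illustration and are not taken from the original code.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Random data standing in for MNIST (assumption: avoids a dataset download).
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)
x_test = rng.random((64, 28, 28)).astype("float32")
y_test = rng.integers(0, 10, size=64)


def build_model():
    # Hypothetical helper that returns a compiled copy of the architecture.
    m = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28)),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(32, activation="relu"),
        tf.keras.layers.Dense(10, activation="softmax"),
    ])
    m.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
    return m


# Train, evaluate, and save only the weights.
model = build_model()
model.fit(x_train, y_train, epochs=2, verbose=0)
loss_before, acc_before = model.evaluate(x_test, y_test, verbose=0)
weights_path = os.path.join(tempfile.mkdtemp(), "trained.weights.h5")
model.save_weights(weights_path)

# Later: rebuild, compile, load the weights, and check the performance.
new_model = build_model()
new_model.load_weights(weights_path)
loss_after, acc_after = new_model.evaluate(x_test, y_test, verbose=0)
print("loss before/after:", loss_before, loss_after)

# Training resumes from the loaded weights rather than from scratch.
new_model.fit(x_train, y_train, epochs=1, verbose=0)
```

Note that new_model starts with a fresh optimizer, so resuming this way carries over the learned parameters of the layers only.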

3.3 Saving weights during training

Keras allows us to save weights during model training through the ModelCheckpoint callback.

But why do we need to save weights during training?

- If your model is small and takes only a few seconds to train, then we don't need to save weights during training. But what if your model is big, training takes hours or days, and an unexpected failure in the system (e.g. a power failure) or an out of memory (OOM) problem causes the training process to terminate? With saved weights, training can resume from the last checkpoint instead of starting over.

- In practice, machine learning models get new data continuously. We can add the new data to the training data and use the latest checkpoint to retrain the model so that performance is better.

- Maybe you trained a model on one machine and now you have a much bigger machine but don't want to start the training from scratch.

What is a checkpoint?

Checkpointing is a technique that provides fault tolerance for computing systems. It basically consists of saving a snapshot of the application's state, so that applications can restart from that point in case of failure. This is particularly important for long running applications that are executed in failure-prone computing systems. (from Wikipedia)

Keras has a ModelCheckpoint callback, but what does it do?

- ModelCheckpoint captures the weights of the model or the entire model

- It allows us to specify which metric to monitor, such as loss or accuracy on the training or validation dataset. It can save weights automatically when the change in the metric satisfies a set condition

- It allows us to load the latest checkpoint to resume training where it was left off

- The ModelCheckpoint callback also allows us to save the best model or best model weights when you select save_best_only=True

The major use of ModelCheckpoint is to save the model weights or the entire model when there is any improvement observed during the training. The code below saves the model weights every epoch.
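A minimal sketch of saving weights at the end of every epoch. It is not the article's original code: the data is random, the directory is temporary, and the file name pattern ends in .weights.h5 instead of .ckpt, an assumption made so that the sketch runs on both older tf.keras and Keras 3. With this name a single HDF5 file is written per epoch.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Random data standing in for MNIST (assumption: avoids a dataset download).
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# {epoch:04d} is filled in by the callback, so each epoch gets its own file.
checkpoint_dir = os.path.join(tempfile.mkdtemp(), "training_1")
os.makedirs(checkpoint_dir, exist_ok=True)
checkpoint_path = os.path.join(checkpoint_dir, "cp-{epoch:04d}.weights.h5")

cp_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath=checkpoint_path,
    save_weights_only=True,  # weights only, not the entire model
    save_freq="epoch",       # save at the end of every epoch
)

model.fit(x_train, y_train, epochs=3, callbacks=[cp_callback], verbose=0)

saved_files = sorted(os.listdir(checkpoint_dir))
print(saved_files)
```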

In the above example, we set checkpoint_path = "training_1/cp-{epoch:04d}.ckpt" so that checkpoint file names automatically change depending on the save_freq argument. However, if you select save_best_only=True, then you can define a fixed checkpoint_path such as checkpoint_path = "training_2/cp.ckpt", and this file is overwritten when the current model has better performance than the previous best model. The following code shows how to use save_best_only.
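A sketch of save_best_only with a fixed file name, under the same assumptions as before (random stand-in data, a temporary directory, and a .weights.h5 name in place of .ckpt). The choice of val_loss as the monitored quantity is also just an illustration.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Random data standing in for MNIST (assumption: avoids a dataset download).
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])

# Fixed file name: the file is overwritten whenever val_loss improves.
checkpoint_dir = os.path.join(tempfile.mkdtemp(), "training_2")
os.makedirs(checkpoint_dir, exist_ok=True)
checkpoint_path = os.path.join(checkpoint_dir, "cp.weights.h5")

best_callback = tf.keras.callbacks.ModelCheckpoint(
    filepath=checkpoint_path,
    monitor="val_loss",
    mode="min",
    save_best_only=True,
    save_weights_only=True,
)

model.fit(x_train, y_train, validation_split=0.25, epochs=3,
          callbacks=[best_callback], verbose=0)

# Only one file exists, holding the best weights seen so far.
checkpoint_files = os.listdir(checkpoint_dir)
print(checkpoint_files)

# Restore the best weights into the model.
model.load_weights(checkpoint_path)
```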

ModelCheckpoint saves three different files: (1) a checkpoint file, (2) an index file, and (3) a checkpoint data file. The three files are as shown below.

checkpoint: This file records the name of the latest checkpoint. The file in our example has model_checkpoint_path: "cp-0010.ckpt" and all_model_checkpoint_paths: "cp-0010.ckpt"

cp-0001.ckpt.data-00000-of-00001 contains the values for all the variables after the first epoch, without the architecture.

cp-0001.ckpt.index simply stores the list of variable names and shapes saved after the first epoch. More details here

The arguments of the ModelCheckpoint callback are as shown below (from the TensorFlow website). Try to play with these arguments to learn more.

filepath: string or PathLike, path to save the model file. filepath can contain named formatting options, which will be filled with the value of epoch and keys in logs (passed in on_epoch_end). For example: if filepath is weights.{epoch:02d}-{val_loss:.2f}.hdf5, then the model checkpoints will be saved with the epoch number and the validation loss in the filename.

monitor: quantity to monitor.

verbose: verbosity mode, 0 or 1.

save_best_only: if save_best_only=True, the latest best model according to the quantity monitored will not be overwritten. If filepath doesn't contain formatting options like {epoch} then filepath will be overwritten by each new better model.

mode: one of {auto, min, max}. If save_best_only=True, the decision to overwrite the current save file is made based on either the maximization or the minimization of the monitored quantity. For val_acc, this should be max, for val_loss this should be min, etc. In auto mode, the direction is automatically inferred from the name of the monitored quantity.

save_weights_only: if True, then only the model's weights will be saved (model.save_weights(filepath)), else the full model is saved (model.save(filepath)).

save_freq: 'epoch' or integer. When using 'epoch', the callback saves the model after each epoch. When using integer, the callback saves the model at end of this many batches. If the Model is compiled with experimental_steps_per_execution=N, then the saving criteria will be checked every Nth batch. Note that if the saving isn't aligned to epochs, the monitored metric may potentially be less reliable (it could reflect as little as 1 batch, since the metrics get reset every epoch). Defaults to 'epoch'.

**kwargs: Additional arguments for backwards compatibility. Possible key is period.

The full code used in this section is here.

4. Saving and loading entire models

An entire Keras model (architecture + weights + optimizer state + compiler configuration) can be saved to disk in two formats: (i) TensorFlow SavedModel (tf) format, and (ii) H5 format.

Moreover, an entire Keras model can be saved either during training or before/after training the model. However, we will discuss only the simple case of saving and loading an entire model after training. In part II of this series, we will learn more about other use cases.

model.save('MyModel', save_format='tf') saves the entire model in tf format. This will create a folder named MyModel which contains

- assets: directory that may contain additional data used by the TensorFlow graph, such as vocabulary files, class names, and text files used to initialize vocabulary tables.

- saved_model.pb: TensorFlow graph, training configuration, and optimizer state

- variables: weights are saved in this directory

model.save('MyModel_h5', save_format='h5') saves the entire model in h5 format. This will create a single file that contains everything required for you to reload it later, either to restart training or to predict.

You can delete the model object, as the object returned by tf.keras.models.load_model doesn't depend on the code that was used to create it. After loading, you can check the performance of the loaded_model and compare it with the performance of the original model. Performance before saving and after loading is identical. The full code of this example is provided here.
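A minimal sketch of the whole-model round trip in h5 format. The data is random, the file location is hypothetical, and the format is selected through the .h5 file extension rather than the save_format argument, on the assumption that this spelling is accepted by both older tf.keras and Keras 3. The tf (SavedModel) variant is not shown here.

```python
import os
import tempfile

import numpy as np
import tensorflow as tf

# Random data standing in for MNIST (assumption: avoids a dataset download).
rng = np.random.default_rng(0)
x_train = rng.random((256, 28, 28)).astype("float32")
y_train = rng.integers(0, 10, size=256)
x_test = rng.random((64, 28, 28)).astype("float32")

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(32, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])
model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
model.fit(x_train, y_train, epochs=1, verbose=0)

# Keep the predictions so they can be compared after reloading.
preds_before = model.predict(x_test, verbose=0)

# Save architecture, weights, and training configuration into one HDF5 file.
model_path = os.path.join(tempfile.mkdtemp(), "MyModel_h5.h5")
model.save(model_path)

# The original object is no longer needed once the file exists.
del model

# load_model rebuilds the model from the file alone.
loaded_model = tf.keras.models.load_model(model_path)
preds_after = loaded_model.predict(x_test, verbose=0)

preds_match = np.allclose(preds_before, preds_after, atol=1e-6)
print("predictions match:", preds_match)
```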

The above example is a very simple one without any custom metrics/losses or custom layers. In part II of this series, we will see how to deal with saving and loading slightly more complex TensorFlow models.

5. Conclusions

The top 5 takeaways from this article are:

I. Keras has the ability to save a model's architecture only, a model's weights only, or the entire model (architecture and weights)

II. The architecture can be serialized into json or yaml format. A new model can be created by using the model configuration that was serialized earlier. You can use model_from_json or model_from_yaml to create a new model.

III. A Keras model's weights can be saved either during the training or before/after training.

IV. The Keras ModelCheckpoint callback can be used to save the best weights of a model or save weights every N batches or every epoch

V. A model's weights or the entire model can be saved in two formats: (i) the new SavedModel (also called tf) format, and (ii) the HDF5 (also called h5) format. There are some differences between these two formats (we will deal with that in another article), but the general practice is to use tf format if you are using TensorFlow or Keras.

References

- Save and Serialize guide from TensorFlow website

- https://keras.io/api/callbacks/

- https://keras.io/api/models/model_saving_apis/


Source: https://medium.com/swlh/saving-and-loading-of-keras-sequential-and-functional-models-73ce704561f4
